David McCracken

IDT Software Development Engineer (updated: 2016.07.13)

IDT makes integrated circuits. It originally fabricated its own digital ICs, but its product line is increasingly directed toward foundry-fabricated mixed-signal devices with a special focus on mobile applications. It had acquired Leadis, a capacitive touch button IC maker, and wanted to extend the basic technology to support capacitive multi-touch. Leadis had patented and demonstrated a unique single-layer multi-touch technology, which depended on relatively demanding signal processing in the controller. IDT had the expertise to transform the prototype into a cost-effective product.

I was hired to help perfect the performance of the touchscreen controller and to make it cost-effective. The first device had already been fabricated in parallel with continuing firmware development. This was feasible because the firmware was in flash memory and could be changed at any time. However, by the time the device was ready, the program had already exceeded available memory. It was hoped that my code optimization experience (e.g., a 90% reduction of Elo’s WinCE API code) might help with this problem.

When I arrived, the touchscreen program manager had not quite finished his preparations for me, and I was asked to spend the first week helping with a more speculative project. The button touch group was demonstrating a touch-based pointing concept in which an array of miniature touch buttons functions like a trackball. The primary target for this was RIM’s BlackBerry and its imitators. The Windows program used in the demonstration was slow and confusing. Gathering the hardware and software needed just to recreate the demonstration consumed the entire week, during which I concluded that the program was unsalvageable anyway: it was written in BASIC and depended on undocumented DLLs with no source. I didn’t want my first week to be unproductive, so, using a USB communication monitor, I reverse engineered the undocumented communication protocol and, over the weekend, wrote a new program in C++. It was ten times faster and made sense. When I demonstrated it on Monday, I became the project’s new owner and did not return to the touchscreen for a year.

See [Pointer video]

The original goal was to make an 11-mm square flat area that could replace a mechanical or optical trackball but, unlike either of those, could be completely sealed inside the case of a handheld device, could be fabricated by simply etching pads on a PCB used for other circuitry, and would be cheaper. These were difficult goals, but even more demanding ones were added as the project progressed. Cell phone makers wanted an 8-mm square device. Remote control makers wanted the device to operate through 1.6-mm-thick ABS. Some OEMs wanted it to feel and even look exactly like an optical trackball, while others wanted it to feel like a mechanical trackball or a joystick.

The nine miniature touch pads in the original prototype do not afford sufficient resolution for a pointing device. I initially tried to use time as a resolution multiplier. I programmed the demonstration to show continuous movement in the direction indicated by a button pad as long as the user touched it. Joysticks do this. Pressure sensitivity affords them higher resolution but this can’t be fully exploited because of the difficulty that most users have controlling finger pressure. Testers who were comfortable with joysticks found the new device usable and everyone else said it was horrible. In any case, this time dependency is one reason that most people don’t like joysticks.

I considered interpreting swipe gestures as larger, speed-dependent displacements but discovered that most swipes on such a small device contain only approaching and receding touch data, which other capacitive (and short-depth-of-field optical) devices treat as unreliable and discard. In fact, even determining the static, fully touched position was not always easy. The button touch controller used in the prototype only indicated whether the capacitance seen by a button exceeded a touch threshold. A large finger pressing firmly at any position could activate all buttons, paralyzing the pointer.

I had increased the effective resolution slightly by reporting a position halfway between two active buttons. Five-by-five resolution is not significantly more useful than three-by-three, but it gave me an idea, because in effect this was a crude interpolation. The actual capacitance values seen by two opposing pads could be read and the difference scaled to indicate an interpolated position between them. Instead of dividing the position range in half, the differential resolution would be the product of the two buttons’ ranges. Although normally used just for testing, the button controller’s raw capacitance values can be read. The controller offers several alternative ranges, with higher resolution coming at the expense of acquisition speed. A range of 512 was being used, giving a differential range of 262,144 (512 × 512). Precision and linearity are best in the middle of the range. A 100-point segment in the middle of the full range yields a precise differential range of 10,000 (100 × 100).

In addition to extremely high resolution, the differential capacitive method exhibits the benefits normally associated with any type of differential measurement, particularly immunity from common mode noise. Common mode noise in this case is not just electrical but also background capacitance. The latter is so significant that, without the button chip’s periodic recalibration to compensate, even the gross on/off button state determination becomes unreliable. The differential method doesn’t need this compensation even while delivering a precise 10,000-point range. This characteristic is crucial to meeting the requested operation through 1.6-mm ABS because it enables increasing sensitivity with impunity.

The differential capacitive pointing device uses only four pads instead of the nine used in the original concept. For cost-sensitive markets, this is significant. It means that the controller will need fewer pins and a smaller AFE (analog front end) die area. Less obvious, but also significant, is a faster data acquisition cycle when a single CDC (capacitance-to-digital converter) is shared by all channels.
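The differential interpolation can be sketched in a few lines. This is a minimal illustration under stated assumptions, not IDT's firmware. The function names, the gain of 50, and the 0 to 10,000 output scale centered at 5,000 are all hypothetical choices. With those values, a ±100-count pad difference spans the full range. The sketch shows two properties argued above. First, four pads form two opposing pairs, one per axis. Second, a constant added to both pads of a pair leaves the position unchanged.

```python
from __future__ import annotations


def axis_position(neg: int, pos: int, gain: float = 50.0,
                  full_scale: int = 10_000) -> int:
    """Interpolate a position between two opposing pads from raw counts.

    Only the difference between the pads is used, so any capacitance the
    two pads share (background, drift, common-mode noise) cancels out.
    The gain and full_scale values are illustrative assumptions.
    """
    center = full_scale / 2
    raw = center + gain * (pos - neg)
    # Clamp to the reported range.
    return round(min(max(raw, 0.0), float(full_scale)))


def pad_position(north: int, south: int, east: int, west: int) -> tuple[int, int]:
    """Four-pad layout: X from the west/east pair, Y from the south/north pair."""
    return axis_position(west, east), axis_position(south, north)
```

Both `pad_position(300, 300, 350, 300)` and `pad_position(340, 340, 390, 340)` return `(7500, 5000)`, because the extra 40 counts of background on every pad cancel out.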

Operating systems expect user pointing input to come from a mouse, touchscreen, or digitizer pen. To be useful, a device that is not one of these must emulate one of them. The most ubiquitous of these, and the best match to the differential capacitive device, is the mouse. Mice connect to computers as USB-HID-mouse devices. In embedded products, peripherals like mice typically connect to the main CPU via I2C or SPI, but USB-HID still defines the usage patterns. OEMs often develop and demonstrate new products on a computer with a program emulating the product, which will eventually be mass produced in a highly optimized and much less flexible form. Even with no intention of making the device as a USB-HID product, it needs to be amenable to this form for OEMs to evaluate and use it.
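A mouse reports very little. The sketch below encodes a relative movement in the HID boot-protocol mouse format, which is the simplest format a HID mouse can use. That format is one byte of button bits followed by signed 8-bit X and Y displacements. A full report descriptor can define richer formats. The function name and the choice to split large moves across reports are assumptions made for illustration.

```python
from __future__ import annotations

import struct


def boot_mouse_reports(dx: int, dy: int, buttons: int = 0) -> list[bytes]:
    """Encode a relative movement as HID boot-protocol mouse reports.

    Each report is 3 bytes: button bits (left, right, middle), then signed
    8-bit X and Y. Movements larger than one report can carry are split.
    """
    reports = []
    while True:
        step_x = max(-127, min(127, dx))
        step_y = max(-127, min(127, dy))
        reports.append(struct.pack("<Bbb", buttons & 0x07, step_x, step_y))
        dx -= step_x
        dy -= step_y
        if dx == 0 and dy == 0:
            return reports
```

For example, `boot_mouse_reports(300, 0)` yields three reports carrying 127, 127, and 46 counts of X movement.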

Before going to the considerable expense of manufacturing the new device, IDT needed to demonstrate it to potential customers. The most obvious vehicle would be a circuit that emulates the anticipated IC while presenting a USB-HID-mouse interface. To emulate the IC, I could combine any of our capacitive button controllers, used just for its AFE, with a microcontroller with an ARM Cortex-M0 core, which is what we planned to use for our IC. The NXP LPC111x series uses a Cortex-M0 core but has no USB versions. The LPC134x series has USB but uses a Cortex-M3, which is more expensive than we wanted in our IC but no problem for the emulator. The LPC1112 and LPC1342 were cheap enough to possibly be used in two-chip pilot products while getting the single-chip device into production.

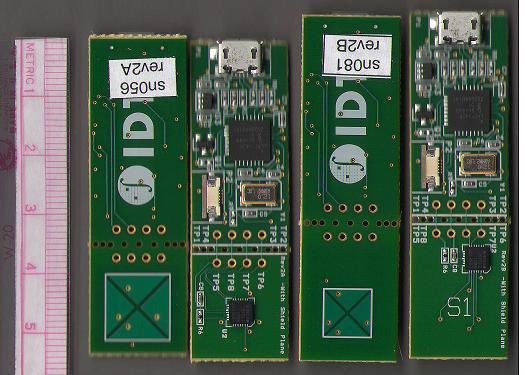

All of IDT’s capacitive button controllers and many other ICs communicate by I2C and/or SPI. It was only a little more work for me to design a flexible development and demonstration system, capable of supporting all of these products, instead of a dedicated emulator for the new pointing device. I made a small PCB with two sections. One section contains the LPC1342, USB interface, and a debug port (reduced pin count serial JTAG). The other contains the button chip and input pad. The two sections communicate by I2C across a breakaway boundary. Breaking off the touch section frees the controller to connect to any I2C device. The controller’s SPI signals are brought out to solder pads for a less convenient but still accessible SPI interface.

While initial experiments showed remarkable rejection of common mode effects, I could not predict the effect of near fields produced by the controller IC (initially the button controller used just for its AFE) and the wires between it and the pads. To develop an understanding of these effects, we made a variety of pad sections: with the IC under the middle of the pads or to the side; with no shield, a grounded shield, or a driven shield; and with input areas ranging from 7 mm to 15 mm. These were quick and easy to design. Because the units were too small to produce individually, each PCB panel had to contain several of them, so we put multiple variations on each panel. Many of our customers were going to break off the pad section anyway, so we gave them units whose pad sections had the less successful designs. Shown here are the pad and component sides of two variations: 11 mm with the controller under the pad, and 8 mm with the controller to the side and a grounded shield.

To work in the OEMs’ development environments, the differential capacitive pointer development/demo unit would have to identify itself as a USB HID mouse. But before it could be shown to anyone, I had to develop extensive firmware for it. All capacitive touch devices require significant signal processing to deliver useful information, and this device needs more than most. It would be very convenient to do basic algorithm development in Windows using the USB-attached unit. It might seem obvious to do this with the device attached as a HID mouse, but that would not work. Windows takes exclusive control of any device that attaches as a HID mouse, and a normal program can’t even open an IOCTL interface to it. If the device instead attaches as a USB vendor-specific device, it needs a kernel-level device driver. Writing one is not especially difficult for me, but it is a lot of work for something that will only be used temporarily. Further, I wanted to demonstrate the device throughout its development without asking evaluators to install an unsigned driver. The most reasonable solution was a vendor-specific HID device with a unique application-level HID driver.

I had been working on my HID driver to support prototypes even before designing the LPC1342 unit and needed it to support several different devices, including one whose VID/PID could not be changed. Instead of hard-wiring any of these, my driver enumerates HID devices and communicates with any willing IDT device, including ones unrelated to my new hardware. I implemented generic control and communication capabilities in the driver but no application-specific functionality, enabling its reuse for any cooperating IDT product.
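Below is a minimal sketch of the enumeration policy described above. It assumes the enumeration itself happens elsewhere (for example, through the Windows SetupAPI and HidD_ functions) and that each result is reduced to a small record. Several parts are assumptions, not the author's protocol: the HidDeviceInfo fields, the probe callback, and the rule that "willing" means a vendor-defined usage page plus a reply to an identification request.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Iterable

# HID Usage Tables reserve usage pages 0xFF00-0xFFFF for vendor-defined use.
VENDOR_DEFINED_PAGE_MIN = 0xFF00


@dataclass(frozen=True)
class HidDeviceInfo:
    """What an enumeration pass might report for one HID interface."""
    path: str
    vendor_id: int
    product_id: int
    usage_page: int


def find_willing_devices(
    devices: Iterable[HidDeviceInfo],
    probe: Callable[[HidDeviceInfo], bool],
) -> list[HidDeviceInfo]:
    """Keep vendor-defined HID interfaces that answer an identification probe.

    No VID/PID is hard-wired: any device that answers the (hypothetical)
    probe is accepted, so a device with a fixed, unchangeable ID still works.
    """
    willing = []
    for dev in devices:
        if dev.usage_page < VENDOR_DEFINED_PAGE_MIN:
            continue  # mice, keyboards, etc. are owned by the OS
        if probe(dev):
            willing.append(dev)
    return willing
```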

My plan, which did succeed, was to develop increasingly sophisticated control capabilities in a Windows application, migrating lower levels to the LPC1342 as needed for performance. Eventually, all firmware necessary for mouse emulation would reside in the LPC1342 and it could identify itself as HID-mouse. This simplified development for me, made it feasible to give OEM customers functional demos throughout the development phase, and provided a painless reverse path to correct or add new algorithms in Windows instead of the less powerful controller development environment.

See [Pointer video]

Given the extraordinary resolution of the differential capacitive device, I initially mapped it to encompass the entire display. This afforded very fast pointer movement but made precise positioning difficult. The smallest jitter produced large movement. I developed an adaptive FIR (finite impulse response) touch position filter, which nearly eliminated jitter when the finger wasn’t moving without slowing the response to fast movement. However, this did not solve the precision problem. Users slow their finger movement as they approach a target in order to avoid overshoot. But the adaptive filter, by design, responds by becoming more aggressive, which inherently increases lag, exacerbating the overshoot problem. This is an inescapable problem. The device cannot be directly mapped to the display. It must be a displacement device.
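The adaptive filter is not specified in detail above. The following is a minimal sketch of one way to get the described behavior. It assumes a plain moving average (a simple FIR filter) whose length shrinks as finger speed rises. The class name, the 8-tap maximum, and the fast_step threshold are hypothetical. The sketch also reproduces the drawback described: as the finger slows near a target, the window lengthens and the output lags.

```python
from __future__ import annotations

import math
from collections import deque


class AdaptiveAverager:
    """Moving-average (FIR) position filter whose length shrinks with speed.

    A still or slowly moving finger is averaged over many samples, which
    suppresses jitter; a fast finger is averaged over few, so it is not slowed.
    """

    def __init__(self, max_taps: int = 8, fast_step: float = 200.0):
        self.max_taps = max_taps
        self.fast_step = fast_step  # per-sample step treated as "fast"
        self.history = deque(maxlen=max_taps)

    def update(self, x: float, y: float) -> tuple[float, float]:
        if self.history:
            px, py = self.history[-1]
            speed = math.hypot(x - px, y - py)
        else:
            speed = 0.0
        self.history.append((x, y))
        fraction = min(speed / self.fast_step, 1.0)
        taps = max(1, round(self.max_taps * (1.0 - fraction)))
        taps = min(taps, len(self.history))
        recent = list(self.history)[-taps:]
        return (sum(p[0] for p in recent) / taps,
                sum(p[1] for p in recent) / taps)
```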

With a displacement device, finger movement can be scaled arbitrarily to any amount of pointer movement on the display. Displacement devices normally increase the scaling factor according to speed of movement, but that doesn’t work very well for small devices. Speed determination inherently lags movement because it requires at least two samples. To be responsive, the device needs to report movement immediately, and it can’t go back in time to change what it has already reported, so speed-based scaling can only apply to subsequent movement. However, a small input area may not provide many more samples before the finger leaves, giving the scaling increase little opportunity to serve its purpose. Calculating speed over a shorter distance does not help: as the sampling distance decreases, the measured speed becomes increasingly erratic. If acceleration follows it, scaling continuously expands and contracts, producing an overall effect of no acceleration at all. This is a physiological phenomenon, confirmed by optical trackball engineers, who have also told me that they have not been able to find a good solution. A common user complaint about those devices is that acceleration is slow to respond to speed changes but then changes by too much, making the transition region uncontrollable.

I solved the acceleration problem using control theory. In the same way that PID (proportional-integral-derivative) combines errors sampled over different time periods to produce rapid but smooth control with minimal overshoot, I combined several temporal views of touch movement to create continuously variable acceleration with no lag. However, the basic control mechanism is not PID and does not prevent target overshoot. This cannot be done using only speed.
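The exact control formula is not given above. The sketch below illustrates only the general idea of blending several temporal views of speed into one gain. The window lengths, weights, and gain constants are hypothetical. The current step is always reported immediately; history affects only how much it is scaled.

```python
from __future__ import annotations

import math
from collections import deque


class MultiWindowGain:
    """Scale each movement step by a gain blended from several speed windows.

    Short windows react quickly but are erratic; long windows are steady but
    lag. Windows, weights, and constants here are illustrative guesses.
    """

    def __init__(self, windows=(1, 3, 8), weights=(0.5, 0.3, 0.2),
                 gain_per_speed: float = 0.05, max_gain: float = 8.0):
        self.windows = windows
        self.weights = weights
        self.gain_per_speed = gain_per_speed
        self.max_gain = max_gain
        self.positions = deque(maxlen=max(windows) + 1)

    def _speed(self, n: int) -> float:
        """Average distance per sample over the last n samples."""
        n = min(n, len(self.positions) - 1)
        (x0, y0), (x1, y1) = self.positions[-1 - n], self.positions[-1]
        return math.hypot(x1 - x0, y1 - y0) / n

    def step(self, x: float, y: float) -> tuple[float, float]:
        """Return the pointer displacement for a new touch position."""
        self.positions.append((x, y))
        if len(self.positions) < 2:
            return (0.0, 0.0)  # first sample of a touch: nothing to move yet
        speed = sum(w * self._speed(n) for n, w in zip(self.windows, self.weights))
        gain = min(1.0 + self.gain_per_speed * speed, self.max_gain)
        (px, py), (cx, cy) = self.positions[-2], self.positions[-1]
        return ((cx - px) * gain, (cy - py) * gain)
```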

Touch target overshoot appears as rubber-banding. Realizing that the pointer has gone past the target, users suddenly and rapidly reverse finger movement, triggering increased acceleration and consequent reverse overshoot. Increasing frustration causes faster movement and more rapid reversals, making the problem worse. I developed a means of detecting and measuring the severity of this pattern. Reduced to a single value, it could be included in the control formula. As in any multiterm control mechanism, the influence of each term is determined by an adjustable coefficient. If a user is uncomfortable with the amount of rubber-banding, the coefficient of this term can be increased, decreasing rubber-banding but at the expense of slower acceleration response.
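The rubber-banding measure is described only in outline. As an assumption-laden sketch, the meter below scores the fraction of recent movement that reverses direction. damped_gain shows one way a coefficient could trade rubber-banding against acceleration response. Both names and both formulas are hypothetical.

```python
from __future__ import annotations

from collections import deque


class RubberBandMeter:
    """Score recent back-and-forth motion along one axis: 0 = none, near 1 = severe."""

    def __init__(self, history: int = 12, min_step: float = 2.0):
        self.steps = deque(maxlen=history)
        self.min_step = min_step  # smaller steps are treated as jitter and ignored

    def update(self, step: float) -> float:
        if abs(step) >= self.min_step:
            self.steps.append(step)
        steps = list(self.steps)
        # Each direction reversal counts by the smaller of the two opposing steps.
        reversed_motion = sum(min(abs(a), abs(b))
                              for a, b in zip(steps, steps[1:]) if a * b < 0)
        total = sum(abs(s) for s in steps)
        return reversed_motion / total if total else 0.0


def damped_gain(gain: float, severity: float, coefficient: float) -> float:
    """Shrink the acceleration above 1x as rubber-banding severity rises.

    A larger coefficient suppresses rubber-banding more strongly, at the
    cost of slower acceleration response.
    """
    return 1.0 + (gain - 1.0) / (1.0 + coefficient * severity)
```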

For a miniature touch pointer, speed of finger movement is the most important example of a sub-perceptive gesture: something that nearly all users do naturally, expecting the same result. Obviously, faster finger movement should move the pointer further. Rubber-banding is the second most important sub-perceptive gesture. These gestures are strongly at play in nearly all contexts. Other sub-perceptive gestures are more subtle and/or more limited in scope. For example, if the finger is moving rapidly toward the edge of the input pad and then stops at the edge but doesn’t reduce pressure, the user probably wants to move further but is thwarted by the “end of the world” effect. I developed formulas to detect and measure the severity of a number of these. Any combination of these measures can be used to control acceleration. My demo/development application includes a control panel with sliders to set acceleration control coefficients. Sub-perceptive gestures that are difficult to explain are either presented with technically fuzzy but effective definitions or merged with more widely understood phenomena so that testers can vary the response even if they don’t fully understand it.
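The following is a hypothetical sketch of one such measure and of combining measures with slider-style coefficients. It assumes the normalized touch signal strength serves as a stand-in for finger pressure, since capacitive sensing does not measure pressure directly. The thresholds and function names are illustrative, not the author's formulas.

```python
from __future__ import annotations


def edge_stall_severity(xs: list[float], strength: float, width: float,
                        margin: float = 5.0, full_touch: float = 0.8,
                        fast_step: float = 10.0) -> float:
    """Score in [0, 1] for the "end of the world" gesture along one axis.

    xs holds recent positions (oldest first); strength is the current
    normalized touch signal, used here as a stand-in for finger pressure.
    """
    if len(xs) < 4 or strength < full_touch:
        return 0.0  # too little history, or the finger is lifting off
    last = xs[-1]
    if last >= width - margin:
        direction = 1.0   # stopped at the high edge
    elif last <= margin:
        direction = -1.0  # stopped at the low edge
    else:
        return 0.0
    if abs(xs[-1] - xs[-2]) > margin:
        return 0.0  # still moving, not stalled
    # Average speed toward that edge before the stop.
    approach = [(b - a) * direction for a, b in zip(xs[:-2], xs[1:-1])]
    speed = sum(approach) / len(approach)
    return max(0.0, min(speed / (2 * fast_step), 1.0))


def combined_gain(measures: dict[str, float], coefficients: dict[str, float],
                  base: float = 1.0) -> float:
    """Weighted sum of gesture measures; a negative coefficient damps acceleration."""
    total = sum(coefficients.get(name, 0.0) * value
                for name, value in measures.items())
    return max(1.0, base + total)
```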

See [Pointer video] [Touch Flick Algorithm Development] [Big Data Analytics]

For ordinary pointer movement in any touch device, the finger touches down, moves, and eventually lifts off (untouches). If this sequence is very short, it is customarily interpreted as a flick (a.k.a. swipe) gesture, which can have special meaning. A plot of finger height during a typical flick shows a flat-bottomed V. The user perceives the entire trajectory as the gesture, but practical devices use only the flat section because data in the approaching and receding (often called touch and untouch) periods is unreliable. With touchscreens and touch pads, users quickly (nearly subconsciously) learn how long the flat section must be to register as the desired gesture. The width of a miniature device, however, is approximately the length of a typical flick with no flat section at all. To recognize a flick, the device must use the touch and untouch data that other devices discard.
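Here is a hypothetical sketch that makes the distinction concrete. It splits a per-sample touch-strength trace (a stand-in for finger height, with higher values meaning closer) into approach, flat, and recede segments. The split uses an assumed full_touch threshold. On a large pad, the flat segment is what conventional devices keep. On a miniature pad it is often empty, leaving only the approach and recede data.

```python
from __future__ import annotations


def split_flick(strengths: list[float], full_touch: float) -> tuple[range, range, range]:
    """Split a per-sample touch-strength trace into approach, flat, and recede.

    Conventional devices keep only the flat (fully touched) part. When a
    trace has no flat part at all, it is split at its strongest sample.
    """
    n = len(strengths)
    if n == 0:
        return range(0), range(0), range(0)
    flat = [i for i, s in enumerate(strengths) if s >= full_touch]
    if flat:
        first, end = flat[0], flat[-1] + 1
    else:
        peak = max(range(n), key=strengths.__getitem__)
        first = end = peak
    return range(0, first), range(first, end), range(end, n)
```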

I built into the development program a raw capacitance recorder, which I used to capture the thumb and finger eight-way (N, NE, E, etc.) flick patterns of many users. Analyzing these for common patterns, I developed more than 20 different algorithms for interpreting the data as the angle, length, and speed of a flick. I also built into the program a test mode where the user makes specified flicks, against which all algorithms are tested. With varying directions, speeds, and fingers (especially thumb vs. finger) and many flicks from many users, the algorithms were statistically analyzed and ranked for reliability overall and in specific categories, such as vertical (N) thumb movement, which is notorious for “rollback”. Several of the best algorithms achieve 80% accuracy, but none are better than 85%. This is not good enough for a product. However, when the best ones fail, they all give different answers. When the best algorithms agree, they are 100% correct. Doing nothing when they disagree would at least avoid stupid answers, but we can do much better. When they disagree, less generally reliable algorithms have the right answer, either individually or collectively.

I implemented an efficient voting mechanism in which the best algorithms are invoked first. If they agree, the analysis is done and the flick is reported. If not, additional algorithms are invoked in ranked order until the collective vote reaches a certain confidence level. The result is 100% correct unless the group can’t decide, which occurs about 1% of the time for most users. The results are slightly worse if tap is included as a possible outcome, because a tap is a flick with little movement, which requires a more accurate distance determination.
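Below is a minimal sketch of the ranked voting scheme. It assumes each algorithm is a function that maps recorded samples to a direction label. The initial group size of 3 and the 0.7 confidence threshold are illustrative parameters, not published values. The cap of 12 consulted algorithms matches the "first dozen" limit described in this section.

```python
from __future__ import annotations

from collections import Counter
from typing import Callable, Optional, Sequence

FlickAlgorithm = Callable[[object], str]  # returns e.g. "N", "NE", ..., "NW"


def vote_flick(samples: object, ranked: Sequence[FlickAlgorithm], initial: int = 3,
               confidence: float = 0.7, max_algorithms: int = 12) -> Optional[str]:
    """Ask algorithms in rank order until their vote is decisive.

    The first `initial` algorithms must agree unanimously; after that, the
    leading answer must hold at least `confidence` of the votes cast so far.
    Returns None when no decision is reached within `max_algorithms`.
    """
    votes = Counter()
    for consulted, algorithm in enumerate(ranked[:max_algorithms], start=1):
        votes[algorithm(samples)] += 1
        if consulted < initial:
            continue
        leader, count = votes.most_common(1)[0]
        needed = 1.0 if consulted == initial else confidence
        if count / consulted >= needed:
            return leader
    return None  # undecided: report nothing rather than guess
```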

The flick algorithms are computationally cheap, but I have made them even cheaper by caching common intermediate terms. Testing has also shown little improvement from consulting more algorithms once the first dozen fail to reach a decision, so the process gives up at that point. The differential capacitive pointer is an OEM component that may go into product environments that vary in many ways, such as plastic overlay thickness and type, size of the touch pad, and whether the thumb is a dominant input means. Testing shows that only the ranking of algorithms is sensitive to such variations and that the ranking doesn’t vary between individual units. The variation is also small enough that the optimal ranking for one design remains reliable for another. Consequently, a good default order can be achieved, but personalization can improve the performance in any given product. My demo/development program contains a facility for the OEM to automatically tune a prototype or first article and save the ranking in a configuration file. The ranking is a small list of numbers, which can be programmed into flash or OTP of the production IC by the OEM or IDT, or downloaded into RAM by a host controller at boot time.
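The automatic tuning step can be sketched as scoring each algorithm against a set of labeled test flicks and sorting by accuracy. This is a simplification based on assumptions. The real facility also ranks within categories such as vertical thumb flicks, and the comma-separated format for the saved ranking is hypothetical.

```python
from __future__ import annotations

from typing import Callable, Sequence


def rank_algorithms(algorithms: Sequence[Callable[[object], str]],
                    labeled_flicks: Sequence[tuple[object, str]]) -> list[int]:
    """Order algorithm indices by how many recorded test flicks each gets right."""
    scores = []
    for index, algorithm in enumerate(algorithms):
        correct = sum(1 for samples, expected in labeled_flicks
                      if algorithm(samples) == expected)
        scores.append((-correct, index))  # ties keep the default order
    return [index for _, index in sorted(scores)]


def ranking_to_config(ranking: Sequence[int]) -> str:
    """Serialize the ranking as a short list of numbers."""
    return ",".join(str(i) for i in ranking)
```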

The demo/development program serves many purposes. It can immediately provide simple communication with other IDT products through the USB-I2C/SPI translation capability of my demo board, as well as provide reusable functions and a model for more specific support programs for those devices. It supports two previous generations of interface units with different USB-I2C/SPI translation controllers in addition to my final design. It supports the use of any of IDT’s button touch devices, each of which requires unique initialization, as the AFE for the differential capacitive device under development. It serves as my basic device research tool and as OEM customers’ development and test facility. It is a marketing demo for cell phones, its primary market, as well as for other markets with widely varying requirements in terms of resolution, precision, gesture use, size, acceleration, emulation of other devices, etc. Hundreds of characteristics are determined by the demo target hardware, and hundreds more are flexible and adjustable through multiple program dialogs. Not counting the conceptually infinite range of many of the parameters, there are many thousands of reasonable combinations.

Breaking the program up to reduce the complexity of its options would necessitate constant diligence to propagate improvements and keep all programs consistent. Using version control branches to handle variations would have the same problems. No IDT-managed scheme would address the variations that OEM customers might want to test for themselves without having to ask permission. The only logical solution is an orderly, consistent configuration system for every option, configurable capability, and adjustment. For this, I designed a declarative configuration language and functions to parse and manage a configuration file. Named groups of characteristics and groups of groups can be combined to make a complete configuration. Inheritance and hierarchical composition make any group, including the group that represents the entire configuration, essentially a derived class with no pure virtual elements. Everything specified in a group overrides something otherwise inherited. This makes minor configuration variations trivial without restricting the power to uniquely define all characteristics of a particular configuration.
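The configuration language's syntax is not shown here, so this sketch models groups as Python dictionaries with hypothetical "inherits" and "set" keys. It illustrates only the override rule: a group starts from what it inherits, and anything it specifies itself wins.

```python
from __future__ import annotations


def resolve(groups: dict[str, dict], name: str,
            _path: frozenset[str] = frozenset()) -> dict[str, object]:
    """Flatten a named group into concrete settings.

    Parents listed under "inherits" are applied in order (later parents win),
    then the group's own "set" entries override everything inherited.
    """
    if name in _path:
        raise ValueError(f"circular inheritance through group {name!r}")
    group = groups[name]
    settings: dict[str, object] = {}
    for parent in group.get("inherits", []):
        settings.update(resolve(groups, parent, _path | {name}))
    settings.update(group.get("set", {}))
    return settings
```

Consider a base group that sets resolution, flick, and overlay_mm. A remote group inherits from phone, which inherits from base, and sets only overlay_mm to 1.6 and flick to False. Resolving remote yields base's resolution plus remote's two overrides.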

The regularity of the language is reflected in corresponding structures in the program and functions that read and write the configuration file. The demo/development program itself edits the file automatically in response to changes that the user makes from the GUI. Most users don’t need to know anything about the configuration language or the file, but the file can be directly edited, compared, and ported. When an OEM customer reports unexpected behavior, a simple inspection of the configuration file usually reveals the cause. Any combination of existing parameters can be defined, named, and used as a single object without changing the program. Adding a new parameter requires a program change but this too is declarative. Functions exist for reading and writing all classes of parameters. A new parameter is created by adding its declaration to the appropriate table. It automatically inherits all of the configuration management methods of that parameter class.
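Here is a sketch of the table-driven idea. It assumes a simple name = value line format, since the real syntax is not shown. Each parameter class supplies its own parse and format methods, so declaring a new parameter is a one-row change to PARAMETERS. The class and parameter names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class IntParam:
    """Integer parameter with an allowed range."""
    name: str
    default: int
    low: int
    high: int

    def parse(self, text: str) -> int:
        value = int(text)
        if not self.low <= value <= self.high:
            raise ValueError(f"{self.name}={value} outside [{self.low}, {self.high}]")
        return value

    def format(self, value: int) -> str:
        return str(value)


@dataclass(frozen=True)
class BoolParam:
    """On/off parameter."""
    name: str
    default: bool

    def parse(self, text: str) -> bool:
        word = text.strip().lower()
        if word in ("1", "true", "yes", "on"):
            return True
        if word in ("0", "false", "no", "off"):
            return False
        raise ValueError(f"{self.name}: not a boolean: {text!r}")

    def format(self, value: bool) -> str:
        return "true" if value else "false"


# The declaration table: adding a parameter means adding one row here.
# These names are hypothetical examples.
PARAMETERS = {p.name: p for p in (
    IntParam("speed_coef", 50, 0, 100),
    IntParam("rubber_band_coef", 20, 0, 100),
    BoolParam("report_taps", True),
)}


def read_settings(lines: list[str]) -> dict[str, object]:
    """Start from defaults, then apply 'name = value' lines (# starts a comment)."""
    settings = {name: p.default for name, p in PARAMETERS.items()}
    for line in lines:
        line = line.split("#", 1)[0].strip()
        if not line:
            continue
        name, _, text = line.partition("=")
        name = name.strip()
        settings[name] = PARAMETERS[name].parse(text)
    return settings


def write_settings(settings: dict[str, object]) -> list[str]:
    """Write each setting back using its parameter class's formatter."""
    return [f"{name} = {PARAMETERS[name].format(value)}"
            for name, value in settings.items()]
```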

I often shrink programs that I inherit to half their original size and sometimes to as little as 10% of the original. Such a drastic change can only be achieved by architectural changes, such as eliminating cut-and-paste, replacing control flow with algorithms, and increasing the proper use of common code. I usually don’t waste time on compiler tweaks and peephole optimizations, which can’t reduce code size by more than about 15%.

Examining the touchscreen firmware, I found very few of the bad coding practices that my standard improvements easily correct. A complete redesign might have achieved the required reduction, but customers were waiting for the next firmware release, which did not fit into available memory. I had little choice but to try to buy the required reduction with compiler directives and by exploiting local opportunities in the code. To measure the effectiveness of any tweak, I needed to know the resulting memory footprint. To find reduction opportunities in the code, I wanted to rank functions by memory footprint. These statistics are not reported at any point in the build process, so I wrote Windows XP batch scripts to pass raw information extracted (using a GNU toolchain utility) from object and link files through grep and awk commands to compute the statistics I wanted.
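The original scripts were batch files feeding grep and awk. The same ranking can be sketched in Python over the output of GNU nm with its --print-size (-S) and --size-sort options. Those options list the address, size, type, and name of each sized symbol. Two details are assumptions about the build, not details from the project: treating T and t symbols as code, and the cross-toolchain name (for example, arm-none-eabi-nm).

```python
from __future__ import annotations


def function_sizes(nm_output: str) -> list[tuple[str, int]]:
    """Rank code symbols by size from `nm --print-size` output, largest first."""
    sizes = []
    for line in nm_output.splitlines():
        # Sized symbols look like: <address> <size> <type> <name>.
        # maxsplit keeps demangled C++ names containing spaces intact.
        fields = line.split(maxsplit=3)
        if len(fields) == 4 and fields[2] in ("T", "t"):
            sizes.append((fields[3], int(fields[1], 16)))
    return sorted(sizes, key=lambda item: item[1], reverse=True)
```

The nm output for a linked image can be passed in as a string. Summing the sizes then gives a single total for comparing one build against another.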

The firmware programmers had already turned on all of the compiler’s simple size-optimizing switches, but they didn’t understand, and so hadn’t changed, the defaults of parametric options. For example, GCC inlines functions below a size limit specified by the option -finline-limit=n, where n is a loose measure of complexity (GCC counts it in internal pseudo-instructions). Inlining small functions can reduce code size by eliminating call overhead, but inlining larger ones increases it, so the limit matters. To find the best setting for this program, I wrote a script to build the program multiple times, increasing n each time and running my total-program-size script after each build. The size/threshold function was continuous and essentially parabolic. I used the threshold at the bottom of the curve (the minimum size) as n, whose default value was far from ideal.
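The sweep can be expressed as a small search loop. In this sketch, build_and_measure is a hypothetical callback that stands in for the build script plus the size script. Only the -finline-limit=n option itself is real GCC. Picking the minimum is the appropriate criterion for a roughly parabolic size curve.

```python
from __future__ import annotations

from typing import Callable, Iterable


def inline_limit_flag(n: int) -> str:
    """GCC option that sets the inlining size limit."""
    return f"-finline-limit={n}"


def best_inline_limit(
    candidates: Iterable[int],
    build_and_measure: Callable[[list[str]], int],
) -> tuple[int, int]:
    """Build once per candidate limit; return (limit, size) of the smallest build.

    build_and_measure stands in for whatever rebuilds the firmware with the
    extra flags and returns its total code size in bytes.
    """
    sizes = {n: build_and_measure([inline_limit_flag(n)]) for n in candidates}
    best = min(sizes, key=sizes.__getitem__)
    return best, sizes[best]
```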

With compiler/linker options and code tweaks contributing roughly equally, I was able to reduce the code footprint by 10%. This was enough to avert the immediate crisis. If most size optimization build options had not already been turned on or if the original code had not been reasonably good, the improvement could have been more significant. However, truly significant size and performance improvements cannot be done as an afterthought but result from basic architecture.

See [User’s Guide and Tutorials] [Repository creation flow diagram]

The touchscreen firmware was under haphazard version control. Divergent branches were not controlled, and some of these had gone into OEM products. Since the supposed trunk version hadn’t gone to any customer, it was not clear which branch really was the trunk. Only one programmer was making a serious attempt at version control, but he had set up a Subversion repository, through TortoiseSVN, for only one branch and had not linked it to Eclipse, which all of the other programmers were using but which he hated. I was asked to make some sense of this.

The best long-term solution to the branching problem would be to replace branches with build- or run-time options. Branching should mostly be used to provide a temporary venue for developing cross-module code without breaking the shared version. I planned to merge all of the branches into one trunk but I didn’t want to discard branches that had already been published. I interviewed all of the programmers to determine what code bases they thought they were working on. I examined all of their processes to find things that they were inadvertently using. I reduced the number of code bases by merging duplicates and deleting ones that were not used or distributed. This still left several branches and no obvious trunk. To merge these into a unified repository, I chose one, based on programmers’ preferences, to be the trunk. The others would all be branches, which I would merge “back” into the trunk and seal to prevent further changes. Programmers would be allowed (but not encouraged) to make new branches off the unified trunk while I worked on the code to support options in lieu of branches.

Any version control system would work once the final schema was settled, but I wanted to include the initial transition itself in the repository. The rigid organization and naming conventions of many systems would make this difficult, but Subversion makes it easy. This is fortunate because the lead programmer refused to use anything but TortoiseSVN. For the others, Eclipse has a Subversion plugin. Subversion itself has a native command-line interface. All of these interfaces can share a repository.

I had to develop and test not only the final schema under all three interfaces but also the transition process, all while the programmers continued to modify the sources. To do this, I moved all programmers’ private workspaces onto the network and wrote a script to take a snapshot of everything that would go into the repository. I wrote a script to automate the entire creation process. Normally, a repository is created and then grows in complexity as it is used. In this case, the complexity already existed, and inserting it after the fact required simulating the various operations that would normally have occurred over time. I also wrote scripts to test the schema at various stages automatically by simulating shared use via the three different interfaces. After thorough testing, I told the programmers that the next snapshot would be their last under their private systems and initiated the process, which automated everything from snapshot to final repository. It finished in five minutes. I had given each programmer a script to execute locally to transparently switch over to the new system, along with a document describing the system and how to use it. The document contains a short setup guide for all users and a separate tutorial for each type of interface: TortoiseSVN, Eclipse Subclipse, and command line. In it, I explain the design and the origin of the code base to help the programmers see that what they had previously been doing had not been discarded but merged into a larger organization.